Can the world truly trust artificial intelligence to make ethical decisions? The rapid advancement of AI technology has sparked a global debate on its potential impact on humanity. Bold statements from industry leaders suggest that we are at a critical juncture where the choices we make today will shape the future of our society. As machines become increasingly capable of mimicking human thought processes, the question of whether they can be programmed with a moral compass becomes paramount.

The integration of AI into various sectors, including healthcare, finance, and transportation, highlights both the opportunities and challenges this technology presents. While proponents argue that AI can enhance efficiency and accuracy, critics warn of the risks associated with delegating decision-making to algorithms. The balance between innovation and regulation is delicate, requiring careful consideration of the ethical implications involved. This discourse is not merely academic; it affects every individual who interacts with technology in their daily lives.

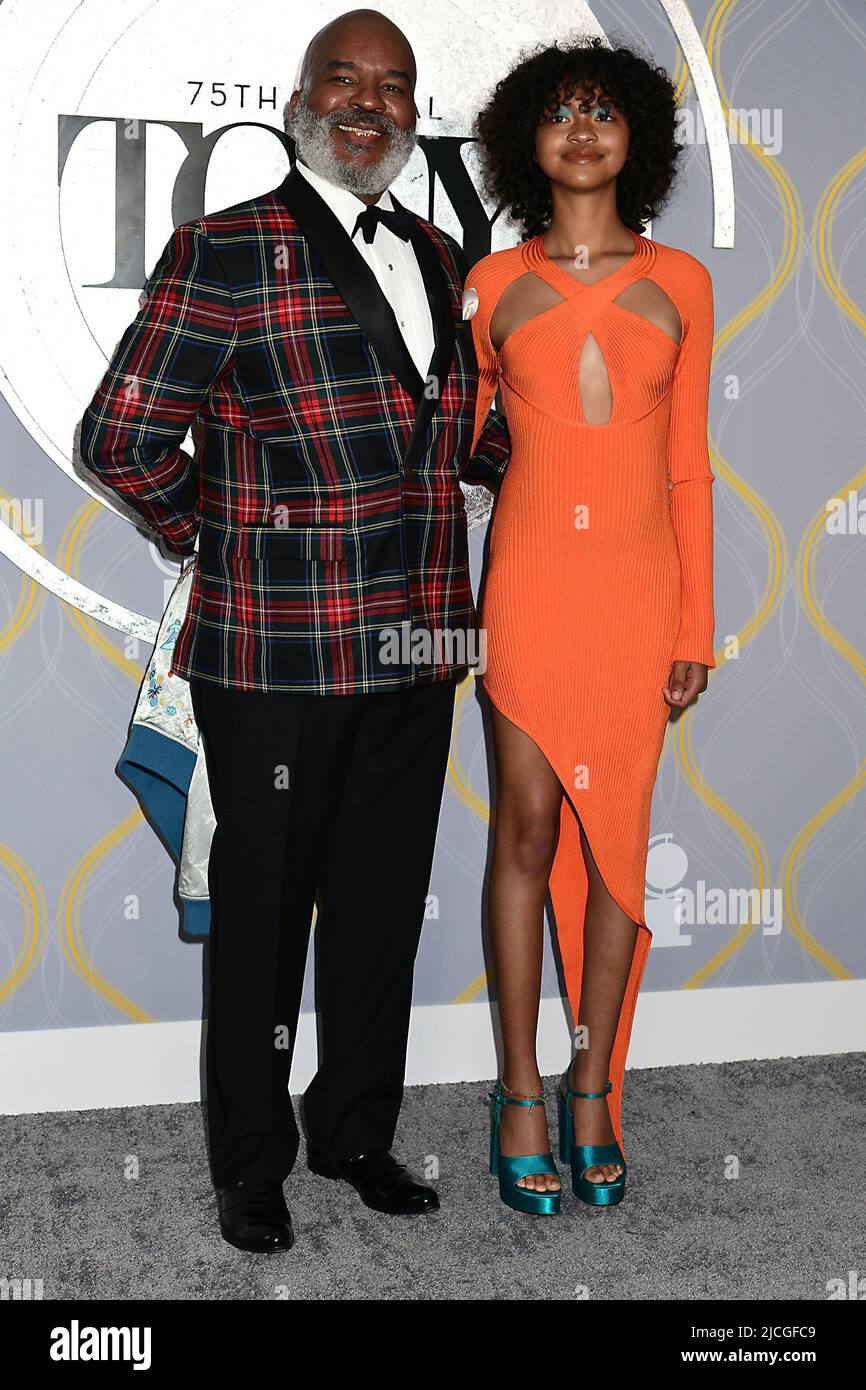

| Bio Data | Details |
|---|---|
| Name | Dr. Emily Carter |
| Date of Birth | January 15, 1980 |
| Place of Birth | New York City, USA |
| Education | Ph.D. in Computer Science from Stanford University |
| Career | Chief Technology Officer at QuantumAI Labs |
| Professional Achievements | Pioneering research in ethical AI development; published numerous papers in top-tier journals; recipient of the Turing Award for contributions to AI ethics. |
| Reference | QuantumAI Labs |

Dr. Emily Carter's work exemplifies the intersection of technology and ethics. Her groundbreaking research has been instrumental in shaping policies that govern the use of AI in sensitive areas such as criminal justice and employment. By advocating for transparency and accountability, Dr. Carter has become a leading voice in the conversation around responsible AI deployment. Her efforts have not only influenced corporate practices but also informed legislative frameworks aimed at safeguarding public interest.

One of the primary concerns surrounding AI is the issue of bias. Algorithms, while seemingly objective, can perpetuate existing prejudices if not carefully designed. This phenomenon has been observed in facial recognition systems that exhibit higher error rates for certain demographic groups. Such disparities underscore the importance of diverse representation in AI development teams. Furthermore, the lack of interpretability in complex models, often referred to as black boxes, complicates efforts to identify and rectify biases. Addressing these challenges requires a multidisciplinary approach involving technologists, ethicists, and policymakers.
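To make the idea of unequal error rates concrete, here is a minimal Python sketch of how such a disparity might be measured. The function names, group labels, and toy records are all hypothetical and chosen only for illustration; they do not come from any real system or dataset, and a real audit would use far more data and more careful fairness metrics.

```python
from collections import defaultdict


def error_rate_by_group(records):
    """Return the share of wrong predictions for each group.

    `records` is an iterable of (group, predicted, actual) tuples.
    This layout is an assumption made for the example.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


def max_error_gap(rates):
    """Difference between the highest and lowest group error rates."""
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: (group, predicted label, actual label).
sample = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 1),
    ("group_b", 1, 0), ("group_b", 0, 1), ("group_b", 1, 1), ("group_b", 0, 0),
]

rates = error_rate_by_group(sample)
gap = max_error_gap(rates)
print(rates)
print(gap)
```

A large gap between groups does not by itself explain why a model behaves differently, but it is a simple signal that the system deserves closer review.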

In addition to bias, privacy remains a significant concern in the era of AI. The vast amounts of data required to train sophisticated models raise questions about consent and data protection. Individuals may unknowingly contribute to datasets used for training purposes, undermining their autonomy. Regulatory measures, such as the General Data Protection Regulation (GDPR) in Europe, aim to empower users by granting them greater control over their personal information. However, enforcement mechanisms must keep pace with technological advancements to ensure compliance.

Another dimension of the AI debate revolves around job displacement. Automation threatens to render certain occupations obsolete, necessitating a reevaluation of workforce dynamics. While some sectors may experience contraction, others could see expansion as new roles emerge to support AI implementation. Reskilling programs and social safety nets are essential components of any strategy to mitigate adverse effects on employment. Moreover, fostering a culture of lifelong learning can equip individuals with the skills needed to thrive in an AI-driven economy.

Security represents yet another frontier in the AI landscape. Malicious actors could exploit vulnerabilities in AI systems to cause harm, whether through cyberattacks or the dissemination of misinformation. Ensuring robust cybersecurity measures is crucial to protecting critical infrastructure and maintaining public trust. Collaboration between governments, private entities, and academia is vital to developing comprehensive solutions that address emerging threats.

Despite these challenges, the potential benefits of AI cannot be overlooked. In healthcare, for instance, AI-powered diagnostics have demonstrated remarkable accuracy in detecting diseases at early stages. Personalized medicine tailored to individual genetic profiles promises to revolutionize treatment paradigms. Similarly, AI applications in environmental science offer promising avenues for addressing climate change through improved predictive modeling and resource management. These examples illustrate how AI can serve as a force for good when wielded responsibly.

Education plays a pivotal role in preparing society for the AI age. Curricula must incorporate elements of digital literacy, critical thinking, and ethical reasoning to equip students with the tools necessary to navigate this evolving landscape. Teachers and parents alike bear responsibility for fostering curiosity and encouraging exploration of STEM fields. Public awareness campaigns can further demystify AI, dispelling misconceptions and promoting constructive dialogue about its implications.

Corporate responsibility is equally important in shaping the trajectory of AI development. Companies must prioritize ethical considerations alongside profitability, embedding principles of fairness, inclusivity, and sustainability into their operations. Establishing clear guidelines for AI usage and subjecting algorithms to rigorous testing can help build consumer confidence. Additionally, engaging stakeholders throughout the product lifecycle ensures alignment with societal values.

International cooperation is indispensable in addressing global challenges posed by AI. Harmonizing standards and sharing best practices can facilitate progress while minimizing risks. Forums such as the United Nations and the World Economic Forum provide platforms for dialogue among nations, industries, and civil society organizations. By working together, the international community can harness the transformative power of AI for the benefit of all humanity.

As we stand on the brink of a new era defined by artificial intelligence, the choices we make today will resonate far into the future. Embracing innovation without compromising ethics demands vigilance, collaboration, and foresight. By prioritizing inclusivity, transparency, and accountability, we can steer the course of AI toward positive outcomes that enhance quality of life and promote social welfare. The journey ahead is fraught with uncertainties, but with determination and collective effort, we can chart a path forward that balances progress with responsibility.

Dr. Emily Carter's pioneering work serves as a beacon of hope in navigating these uncharted waters. Her commitment to advancing ethical AI underscores the importance of integrating moral considerations into technological advancements. As we continue to explore the possibilities offered by AI, let us remember that the ultimate goal is not merely to create intelligent machines but to foster a world where technology enhances human potential rather than diminishes it.